International Board Group

Get in Touch With Us

Office Address

Bank of the West Tower - 500 Capitol Mall, Sacramento, CA 95814

Telephone

(650) 250 - 5845

Cerebras Challenges Nvidia: Redefining AI Inference with Cutting-Edge Technology

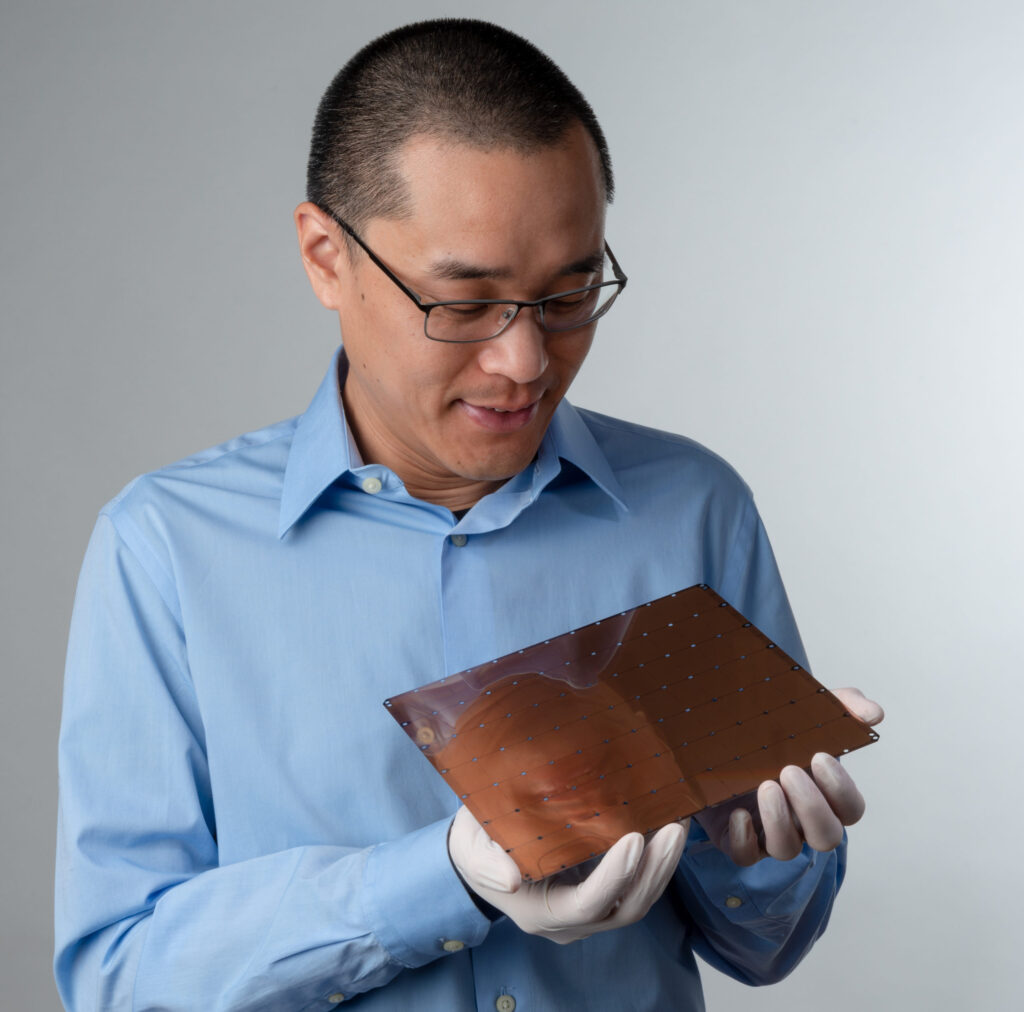

As artificial intelligence (AI) becomes a cornerstone of enterprise innovation, the battle for dominance in AI hardware is heating up. Cerebras Systems, a startup, is challenging AI hardware giant Nvidia with a bold new approach that could redefine the future of AI inference. At the center of this challenge is Cerebras’ Wafer-Scale Engine (WSE), the largest computer chip ever built, designed specifically to handle the immense demands of AI workloads.

Cerebras’ latest development, running Meta’s open-source Llama 3.1 AI model directly on its giant chip, promises significant gains in performance, cost-efficiency, and scalability. This new capability positions Cerebras not only to compete with but potentially to outpace Nvidia’s GPU-based solutions, which have long been the standard in AI inference. With claims of dramatically lower costs and energy usage, Cerebras is making a compelling case for its AI architecture as a game-changer in high-volume AI applications.

The Game-Changing Power of Wafer-Scale Inference

The AI community is familiar with chatbots and other complex models that generate responses in real time, yet the infrastructure powering that inference typically sits in massive cloud data centers. Cerebras’ approach stands out because it places the AI model on the chip itself, removing the traditional bottleneck of moving data between GPUs and memory. This integration could fundamentally change how AI models are deployed and scaled across industries.

Cerebras asserts that its WSE delivers inference at one-third the cost of traditional platforms, such as Microsoft’s Azure, while requiring only one-sixth of the power. If those figures hold in practice, the efficiency is not only a technical achievement but a practical answer for enterprises facing high costs and energy consumption in their AI initiatives.
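
To make the cost and power claims concrete, here is a minimal sketch of the arithmetic. The one-third and one-sixth ratios are the ones Cerebras claims above; the baseline cost and power figures are hypothetical placeholders chosen only for illustration, not real pricing or measurements.

```python
from fractions import Fraction

# Ratios as claimed in the article; not independently verified.
COST_RATIO = Fraction(1, 3)   # claimed cost relative to the baseline platform
POWER_RATIO = Fraction(1, 6)  # claimed power relative to the baseline platform


def projected(baseline, ratio):
    """Scale a baseline figure by a claimed ratio."""
    return baseline * ratio


# Hypothetical baseline: 90,000 (any currency) per month and 60 kW of power.
baseline_cost = 90_000
baseline_power_kw = 60

cost = projected(baseline_cost, COST_RATIO)
power_kw = projected(baseline_power_kw, POWER_RATIO)

print(f"Projected cost:  {cost} (saves {baseline_cost - cost})")
print(f"Projected power: {power_kw} kW (saves {baseline_power_kw - power_kw} kW)")
```

Swapping in an organization's own baseline figures would show what the claimed ratios imply for its budget, assuming the ratios carry over to its workload.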

According to Micah Hill-Smith, co-founder and CEO of Artificial Analysis Inc., “With speeds that push the performance frontier and competitive pricing, Cerebras Inference is particularly compelling for developers of AI applications with real-time or high-volume requirements.”

Ripple Effects Across the AI Ecosystem

The impact of Cerebras’ advancements could create a ripple effect throughout the AI industry. Faster and more efficient AI inference could unlock new applications in fields where latency and performance have previously been limiting factors.

For example, in natural language processing, improved inference speeds could enhance customer service automation by generating more nuanced, context-aware responses. In healthcare, the ability to process large datasets faster could lead to quicker diagnoses and more personalized treatment plans. In the business world, real-time analytics powered by AI would give companies the ability to make faster, data-driven decisions, providing them with a competitive edge in dynamic markets.

As Jack Gold, founder of J. Gold Associates, noted, “Wafer scale integration from Cerebras is a novel approach that eliminates some of the handicaps that generic GPUs have and shows much promise.” However, he cautioned that Cerebras is still a startup navigating a landscape dominated by tech giants like Nvidia.

Inference as a Service: A New Paradigm

Cerebras is also introducing AI Inference as a Service, offering organizations the ability to deploy their AI models with unprecedented speed and efficiency. By integrating models such as Meta’s Llama 3.1 directly onto the WSE, Cerebras claims to drastically reduce inference times. For instance, while state-of-the-art GPUs might process 260 tokens per second for an 8-billion-parameter Llama model, Cerebras reports 1,800 tokens per second, roughly seven times faster.

This speed could enable real-time applications in industries that demand rapid AI-driven insights. For businesses, faster inference could translate into more responsive AI tools for tasks like market analysis, customer behavior insights, and operational decision-making. The capability to analyze and act on data in real time could usher in a new era of AI-powered business strategies.
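
As a rough illustration of what those throughput figures mean for a user, the sketch below converts tokens per second into the time needed to generate a single response. The two throughput numbers come from the paragraph above; the 500-token response length is a hypothetical value, and the calculation ignores network latency and prompt processing.

```python
GPU_TOKENS_PER_SEC = 260         # GPU figure cited in the article
CEREBRAS_TOKENS_PER_SEC = 1_800  # Cerebras-reported figure cited in the article


def generation_time(num_tokens, tokens_per_sec):
    """Seconds needed to generate num_tokens at a steady throughput."""
    return num_tokens / tokens_per_sec


speedup = CEREBRAS_TOKENS_PER_SEC / GPU_TOKENS_PER_SEC

response_tokens = 500  # hypothetical response length
gpu_s = generation_time(response_tokens, GPU_TOKENS_PER_SEC)
wse_s = generation_time(response_tokens, CEREBRAS_TOKENS_PER_SEC)

print(f"Speedup: {speedup:.2f}x")
print(f"{response_tokens} tokens: {gpu_s:.2f} s on GPU vs {wse_s:.2f} s on Cerebras")
```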

Breaking Traditional Bottlenecks in AI

The traditional challenge of AI inference lies in the bottleneck between GPU memory and compute cores. In many systems, AI models are stored in separate memory banks, requiring data to move back and forth between compute cores and memory, slowing down overall performance. Nvidia and other providers have attempted to address this with advanced configurations, but limitations remain.
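
A common back-of-the-envelope way to see why this bottleneck matters is to assume that generating each token requires reading every model weight from memory once. Under that simplifying assumption, memory bandwidth caps throughput regardless of how fast the compute cores are. The sketch below applies the idea to an 8-billion-parameter model; the 16-bit weight size and the bandwidth tiers are illustrative assumptions, not vendor specifications.

```python
def max_tokens_per_sec(num_params, bytes_per_param, bandwidth_bytes_per_sec):
    """Upper bound on tokens/s if each token needs one full pass over the weights."""
    weight_bytes = num_params * bytes_per_param
    return bandwidth_bytes_per_sec / weight_bytes


NUM_PARAMS = 8_000_000_000  # 8-billion-parameter model
BYTES_PER_PARAM = 2         # assumption: 16-bit weights

# Hypothetical bandwidth tiers, not vendor specifications.
for label, bandwidth in [("1 TB/s", 1e12), ("10 TB/s", 1e13), ("100 TB/s", 1e14)]:
    bound = max_tokens_per_sec(NUM_PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{label:>9}: at most {bound:,.1f} tokens/s")
```

The bound scales linearly with bandwidth, which is why keeping weights close to the compute cores can matter more than adding raw compute.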

Cerebras’ Wafer-Scale Engine sidesteps this bottleneck by integrating both compute cores and memory onto a single wafer, reducing the need for data transfer. Each of the 900,000 cores on the WSE can store a portion of the AI model, enabling simultaneous computation across the entire chip. This architecture drastically reduces latency and boosts throughput, allowing for significantly faster inference processing.
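
To give a sense of scale, the sketch below splits an 8-billion-parameter model evenly across 900,000 cores. The even split is a deliberately naive assumption made for illustration; it is not a description of how Cerebras actually maps model weights onto the wafer.

```python
NUM_PARAMS = 8_000_000_000  # 8-billion-parameter model
NUM_CORES = 900_000         # core count cited in the article


def even_split(total_items, num_bins):
    """Return (base, extra): every bin gets base items, the first extra bins get one more."""
    return divmod(total_items, num_bins)


base, extra = even_split(NUM_PARAMS, NUM_CORES)

# Sanity check: the shares add back up to the full model.
total = extra * (base + 1) + (NUM_CORES - extra) * base

print(f"{extra:,} cores hold {base + 1:,} parameters each")
print(f"{NUM_CORES - extra:,} cores hold {base:,} parameters each")
print(f"Total parameters placed: {total:,}")
```

Under this naive split, each core is responsible for only a few thousand parameters, which is what lets computation proceed across the whole chip at once.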

As Andy Hock, Cerebras’ Senior Vice President of Product and Strategy, explains, “We actually load the model weights onto the wafer, so it’s right there, next to the core.” This proximity of data to processing units ensures faster data access, which is critical for high-performance AI applications.

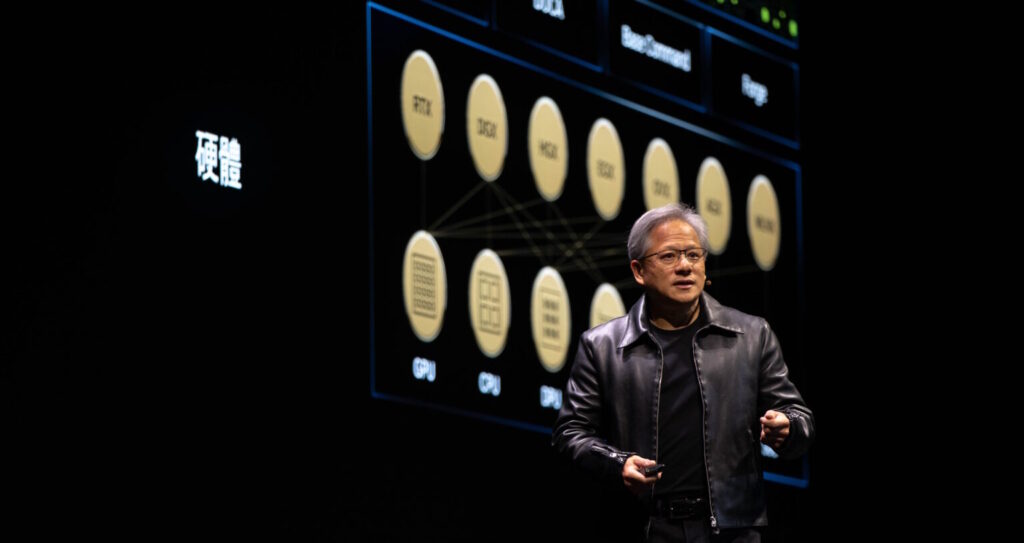

The Challenge to Nvidia’s Dominance

Nvidia’s leadership in AI hardware has been underpinned by its Compute Unified Device Architecture (CUDA), which has become the industry standard for parallel computing in AI. This ecosystem of tools and libraries has created a lock-in effect, making it difficult for competitors to break through, even with superior hardware solutions.

Cerebras’ unique architecture represents a direct challenge to Nvidia’s dominance. By offering a simpler, more efficient inference process that doesn’t rely on external memory, Cerebras is positioning itself as a viable alternative for organizations looking to scale their AI models without the limitations of traditional GPU-based systems.

While breaking Nvidia’s stronghold will be no easy feat, Cerebras is taking steps to smooth the path. The company is making it easier for developers to integrate its hardware into existing workflows by supporting popular AI frameworks like PyTorch and offering a software development kit that facilitates AI model development.

Strategic Leadership Lessons

Cerebras’ challenge to Nvidia offers several leadership insights that can be applied to business strategy:

Innovation Through Specialization: By focusing on a unique architecture tailored specifically for AI, Cerebras shows that specialization can disrupt even the most entrenched market leaders. Leaders should consider how focusing on niche areas of expertise can create breakthroughs in competitive industries.

Efficiency as a Competitive Edge: Cerebras has prioritized cost and energy efficiency, which are critical concerns for businesses at scale. Leaders in any industry should recognize the value of efficiency improvements as a way to enhance both performance and profitability.

Challenging Dominance Requires Differentiation: Competing against an industry giant like Nvidia requires not just innovation, but a fundamentally different approach. Cerebras’ wafer-scale architecture offers a clear alternative, providing an important lesson for leaders aiming to disrupt established markets.

IBG Insights

Adopt AI Inference Solutions for Real-Time Applications: Enterprises looking to gain a competitive advantage should explore AI inference solutions like those offered by Cerebras, which promise faster and more efficient processing for real-time applications.

Efficiency and Scalability Are Key to Future AI Success: As the demand for AI continues to grow, businesses should focus on solutions that optimize for both efficiency and scalability. Cerebras’ architecture points the way forward for organizations needing to manage large-scale AI deployments.

Monitor Industry Disruption for New Opportunities: The ongoing battle between Cerebras and Nvidia shows that even dominant players can be challenged by innovative newcomers. Leaders should keep an eye on industry disruptions and be ready to adapt when new opportunities emerge.

Cerebras Systems is taking a bold step in the AI hardware space, offering an innovative solution that could reshape how AI models are deployed and scaled. The company's long-term success will depend on real-world validation and market adoption, but the reported gains in inference speed and efficiency make it a serious contender. As the AI landscape continues to evolve, companies like Cerebras are leading the charge toward the next generation of AI-driven innovation.

Corporate Office

Palo Alto, California 94302
